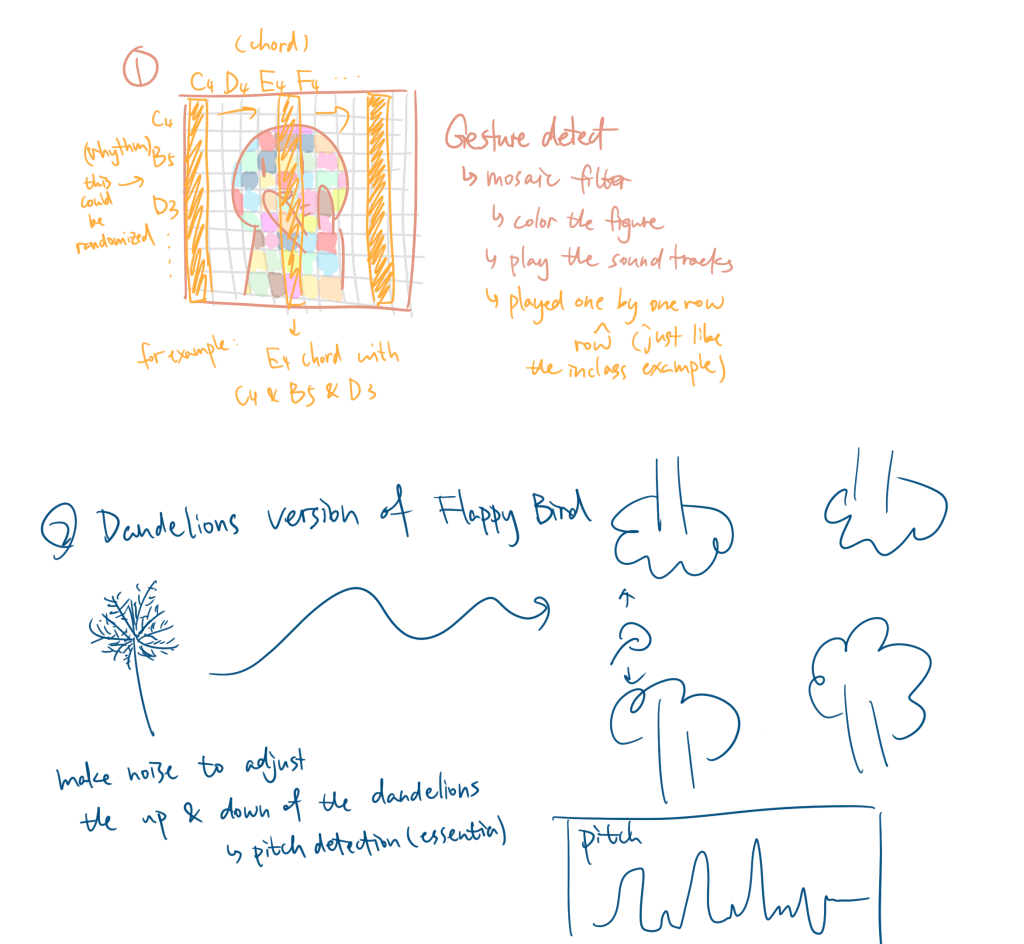

When I first conceptualized this assignment, I wanted to create a real-time, interactive, gesture-based study where you could compose a melody. However, after looking at the class resources on pitch detection, I thought working with pitch would be fun, so I chose option 2. My initial idea was a dandelion blown into the air, with the goal of helping it fly as far as possible. The audience’s voice would be analyzed with pitch detection, ideally down to the exact pitch. The game was inspired by Flappy Bird, but with sound controlling the dandelion.

At first, I wanted to use pitch detection to control the game, but I ran into issues with essentia.js, as the detected pitch fluctuated too much. For example, one moment it would be around 300 Hz, and the next, it would spike to over 4000 Hz, making it impossible to map correctly. Because of this, I had to use volume instead to control the dandelion’s vertical position.
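As a rough sketch of the volume-based control described above (not the project's actual code), the loudness of one audio frame can be measured as its root-mean-square level and mapped to a vertical position. The function names, the `maxLevel` calibration value, and the smoothing factor are all assumptions chosen for illustration; the frame is assumed to hold samples between -1 and 1, as the Web Audio API provides.

```typescript
// Root-mean-square level of one audio frame (samples assumed in -1..1).
function rms(frame: Float32Array): number {
  if (frame.length === 0) return 0;
  let sumSquares = 0;
  for (let i = 0; i < frame.length; i++) {
    sumSquares += frame[i] * frame[i];
  }
  return Math.sqrt(sumSquares / frame.length);
}

// Map a level to a y coordinate: silence sits at the bottom (canvasHeight),
// anything at or above maxLevel sits at the top (0).
// maxLevel is a hypothetical calibration value, not taken from the project.
function levelToY(level: number, canvasHeight: number, maxLevel = 0.3): number {
  const t = Math.min(Math.max(level / maxLevel, 0), 1);
  return canvasHeight * (1 - t);
}

// Move a fraction of the way toward the target each frame so the
// dandelion glides instead of jumping (simple exponential smoothing).
function smooth(previous: number, target: number, factor = 0.2): number {
  return previous + (target - previous) * factor;
}
```

In a draw loop, something like `y = smooth(y, levelToY(rms(frame), height))` would run once per frame. A smaller `factor` makes the movement calmer but less responsive.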

Since this is a MELODY assignment, I also wanted to integrate sound into the game, and I considered playing different pitches based on the y-value of the flower. But the flower's position changes really fast, sometimes spiking to the top in a split second, so I discarded that option. Then I wondered if I could use essentia.js to detect the pitch of my voice and play a note of the same pitch as the flower flew past each tree. For example, if I’m singing C, then when the flower flies over a tree, it would also play C. But this didn’t work, because the notes detected by essentia.js were not very accurate, and the approach was too complicated for me to implement.
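For reference, turning a detected frequency into a note such as C is a short calculation once a stable frequency is available. The sketch below uses the standard equal-temperament relation with A4 = 440 Hz; it is independent of essentia.js, the function names are made up for illustration, and it does nothing about the unstable readings described above.

```typescript
const NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"];

// Nearest MIDI note number for a frequency in Hz (A4 = 440 Hz = MIDI 69).
function frequencyToMidi(hz: number): number {
  return Math.round(69 + (12 * Math.log(hz / 440)) / Math.LN2);
}

// Frequency in Hz of a MIDI note number, e.g. to play the note back.
function midiToFrequency(midi: number): number {
  return 440 * Math.pow(2, (midi - 69) / 12);
}

// Note name with octave, using the convention that MIDI 60 is C4.
function midiToName(midi: number): string {
  const index = ((midi % 12) + 12) % 12;
  const octave = Math.floor(midi / 12) - 1;
  return `${NOTE_NAMES[index]}${octave}`;
}
```

Rounding to the nearest note means a slightly flat or sharp voice still lands on a clean pitch, which could then be played back at `midiToFrequency(midi)` when the flower passes a tree.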

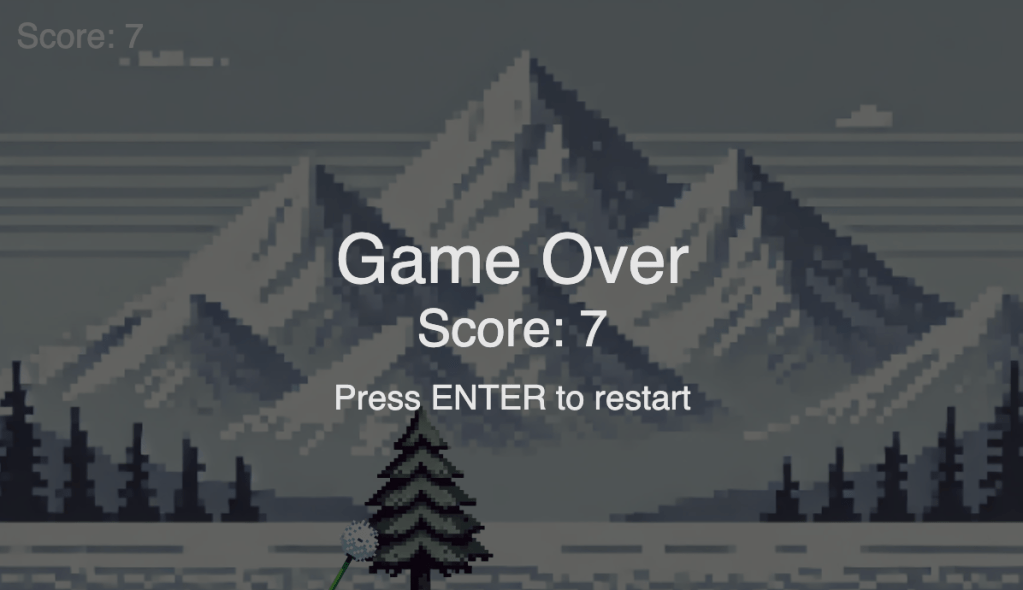

And this is what I have for the final outcome.

PS: This project was really hard on my voice because I had to howl constantly. If possible, please don’t watch my video, it’s so embarrassing. Play it and experience it yourself!
