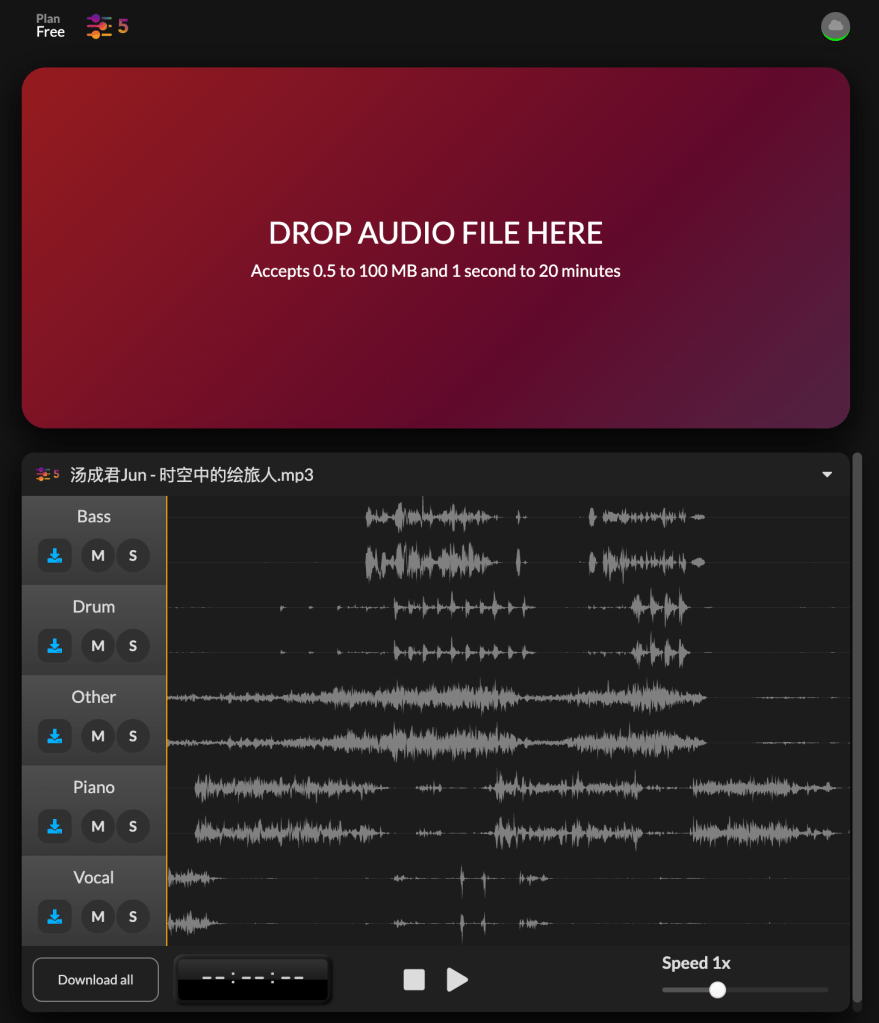

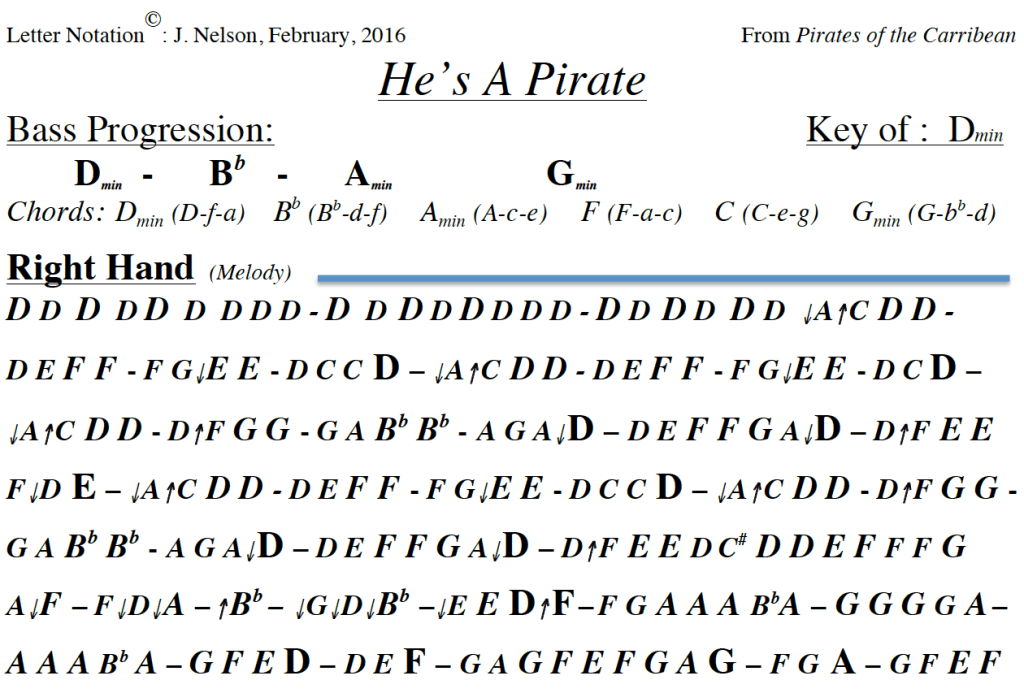

Based on the examples and assignments from class, I decided to use code to play the song I picked last week, "He’s a Pirate". So first I went and found some sheet music.

Then I transcribed the sheet music as notes and chords in a form that Tone.js would recognize.

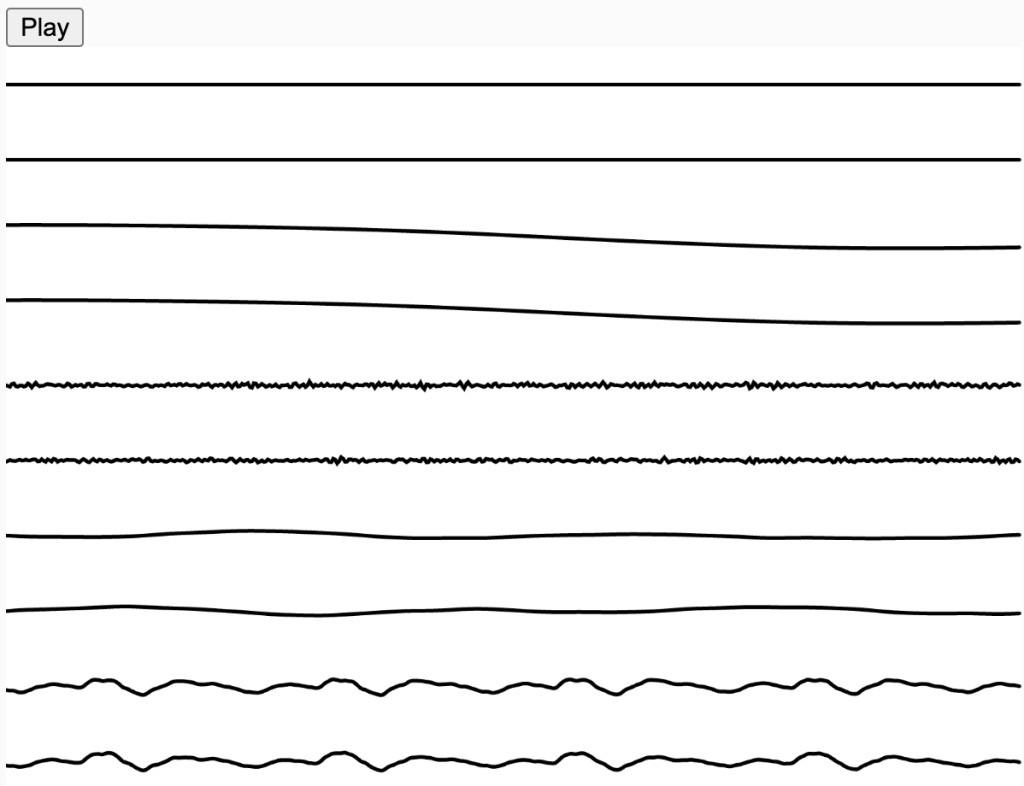
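
As a rough illustration of what such a transcription can look like, here is a minimal sketch. The notes, the `steps` array, the `toEvents` helper, and the `eighthSeconds` value are all placeholders made up for this example, not the actual transcription of the song. Each step is a pitch (or an array of pitches for a chord) plus a length counted in eighth notes, and the helper turns the steps into timed events.

```javascript
// Placeholder material, NOT the actual transcription of the song.
// Each step is [pitch or chord, length in eighth notes].
const steps = [
  ["A3", 1],
  ["C4", 1],
  [["D4", "F4", "A4"], 2], // a chord is just an array of note names
  ["D4", 2],
];

// Turn the steps into timed events.
// `eighthSeconds` is the assumed length of one eighth note in seconds.
function toEvents(steps, eighthSeconds) {
  let elapsedEighths = 0;
  return steps.map(([notes, eighths]) => {
    const event = {
      time: elapsedEighths * eighthSeconds, // start time in seconds
      notes,                                // single note name or chord array
      duration: eighths * eighthSeconds,    // length in seconds
    };
    elapsedEighths += eighths;
    return event;
  });
}

const events = toEvents(steps, 0.25);
console.log(events);
```

Objects shaped like this (a `time` field plus extra data) could then be scheduled in Tone.js with something like `new Tone.Part((time, ev) => synth.triggerAttackRelease(ev.notes, ev.duration, time), events).start(0)`, where `synth` is assumed to be a `Tone.PolySynth` created elsewhere in the sketch.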

After that, I started incorporating the chords and rhythms into p5.js. It worked at first, but something went wrong with the Tone.js Transport timeline: even though I set the time signature to 6/8, it still couldn’t read certain parts of the timeline. I assumed that the first number indicated the number of beats per bar and the second the note value of each beat, so that setting the time signature to 6/8 would give six beats per bar. But it turns out that Tone.js doesn’t see it that way. I couldn’t find any more information about the Transport timeline either, so I’ll just have to live with it.
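
A possible explanation, offered as an assumption rather than something verified against this sketch: the Tone.js documentation describes `Transport.timeSignature` as the numerator over 4, so 6/8 would be stored as 3 quarter notes per bar instead of six eighth-note beats. Positions written as `bars:quarters:sixteenths` would then only have quarters 0 to 2 in each bar. The helper names below (`quarterNotesPerBar`, `eighthsToPosition`) are made up for this example and only reproduce that arithmetic in plain JavaScript.

```javascript
// Assumption: the time signature is stored as "quarter notes per bar",
// so [6, 8] becomes (6 / 8) * 4 = 3.
function quarterNotesPerBar(numerator, denominator) {
  return (numerator / denominator) * 4;
}

// Convert a count of eighth notes from the start of the piece into a
// "bars:quarters:sixteenths" position string under that assumption.
function eighthsToPosition(eighths, numerator, denominator) {
  const sixteenths = eighths * 2;
  const sixteenthsPerBar = quarterNotesPerBar(numerator, denominator) * 4;
  const bars = Math.floor(sixteenths / sixteenthsPerBar);
  const rest = sixteenths % sixteenthsPerBar;
  return `${bars}:${Math.floor(rest / 4)}:${rest % 4}`;
}

console.log(quarterNotesPerBar(6, 8));   // 3
console.log(eighthsToPosition(5, 6, 8)); // "0:2:2" (sixth eighth note of bar 0)
console.log(eighthsToPosition(6, 6, 8)); // "1:0:0" (first eighth note of bar 1)
```

If that assumption holds, a position such as `0:4:0` would not land on the fifth beat of the first bar in 6/8, which might be why parts of the timeline seemed unreadable.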

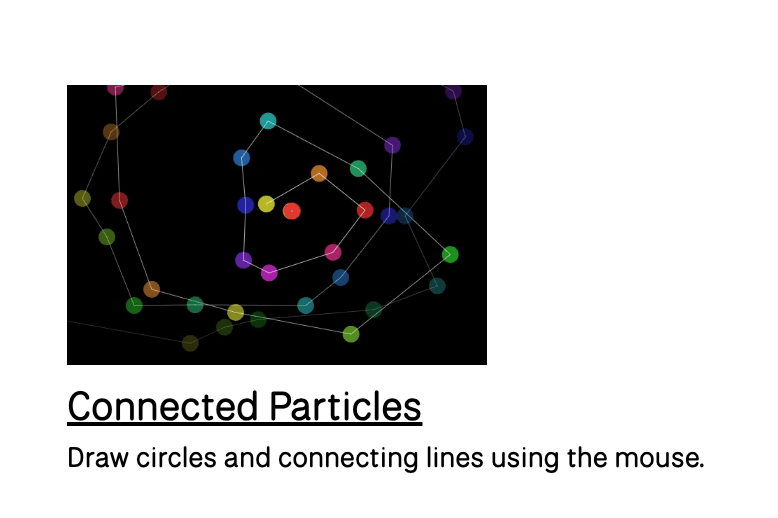

After completing the music section, I used the concordant/discordant visuals in this project. The result really complements the music visually.